-

Новости

- ИССЛЕДОВАТЬ

-

Страницы

-

Группы

-

Мероприятия

-

Статьи пользователей

-

Marketplace

-

Форумы

Snowflake Architecture for Modern Data Engineering Pipelines

Introduction

Modern data engineering pipelines must handle massive data volumes, diverse data sources, and real-time processing requirements—all while remaining scalable and cost-efficient. Snowflake has become a core platform for building modern data engineering pipelines due to its cloud-native architecture and ability to separate compute from storage.

For any organization delivering enterprise-grade data engineering services, Snowflake provides a flexible and high-performance foundation to design, deploy, and scale data pipelines efficiently.

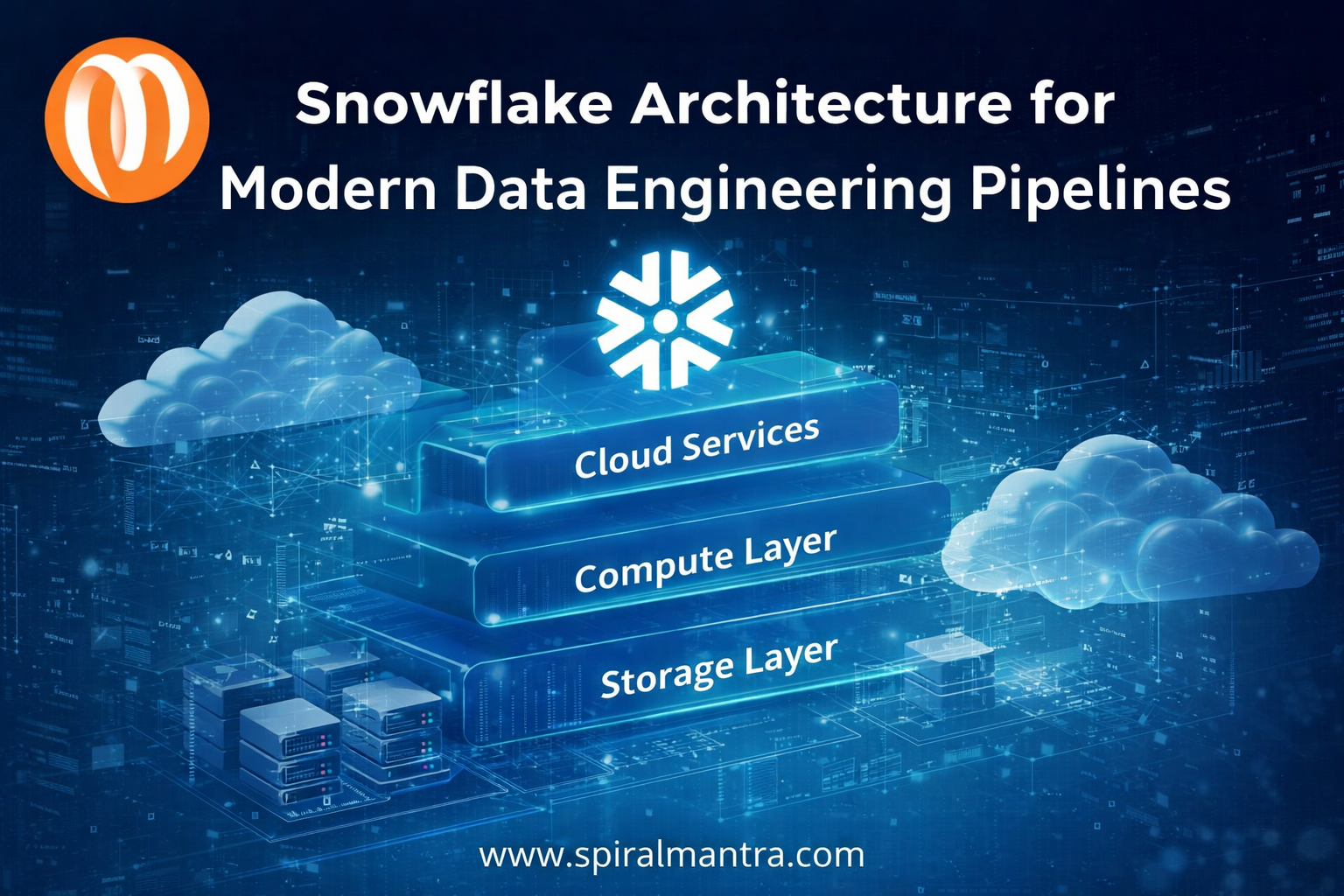

Understanding Snowflake’s Cloud-Native Architecture

Snowflake is built as a fully managed, cloud-native data platform that runs on AWS, Azure, and Google Cloud. Unlike traditional data warehouses, Snowflake was designed from the ground up to support modern data engineering workloads.

Its architecture is divided into three key layers:

-

Storage Layer

-

Compute Layer

-

Cloud Services Layer

This separation is the backbone of Snowflake’s scalability and performance.

Storage Layer: Centralized and Scalable Data Storage

The storage layer in Snowflake stores data in a compressed, columnar format within cloud object storage.

Key capabilities:

-

Automatically manages data partitioning and compression

-

Supports structured and semi-structured data (JSON, Parquet, Avro, XML)

-

Enables near-infinite storage scalability

Data engineers can store raw, curated, and analytics-ready data in the same platform, making Snowflake ideal for modern data engineering services that require centralized and governed data storage.

Compute Layer: Virtual Warehouses for Pipeline Processing

The compute layer consists of virtual warehouses, which are independent compute clusters used to process queries, transformations, and data loads.

Why this matters for data engineering:

-

Compute and storage scale independently

-

Multiple pipelines can run concurrently without contention

-

Warehouses can be resized or paused to optimize costs

This design allows a data engineering company to support batch processing, real-time ingestion, and analytical workloads simultaneously.

Cloud Services Layer: Intelligence and Orchestration

The cloud services layer coordinates all Snowflake activities and handles:

-

Query optimization and execution planning

-

Metadata management

-

Security, access control, and governance

-

Transaction management

For data engineering pipelines, this layer eliminates the need for manual tuning and infrastructure management.

Data Ingestion Architecture in Snowflake

Snowflake supports both batch and streaming data ingestion patterns.

Common ingestion approaches:

-

Snowpipe for continuous, event-driven ingestion

-

Bulk loading from cloud storage (S3, Azure Blob, GCS)

-

Integration with Kafka and third-party ingestion tools

This flexibility allows data engineers to design resilient pipelines capable of handling real-time and high-volume workloads.

ELT-Based Data Transformation Architecture

Snowflake follows a modern ELT (Extract, Load, Transform) approach. Data is first loaded into Snowflake and then transformed using its compute engine.

Benefits of ELT in Snowflake:

-

High-performance SQL-based transformations

-

Reduced pipeline complexity

-

Easy integration with dbt and orchestration tools

This architecture is widely adopted by organizations offering scalable data engineering services and advanced data analytics services.

Pipeline Orchestration and Automation

Snowflake integrates seamlessly with orchestration tools such as:

-

Apache Airflow

-

Prefect

-

Dagster

Data engineers can automate:

-

Data ingestion schedules

-

Transformation dependencies

-

End-to-end pipeline execution

This results in reliable, production-ready pipelines with minimal operational overhead.

Security and Governance Architecture

Snowflake includes built-in security features that are critical for enterprise data engineering:

-

Role-based access control (RBAC)

-

Data encryption at rest and in transit

-

Secure data sharing without duplication

These capabilities help any data engineering company maintain compliance while delivering secure data engineering services.

Performance Optimization in Data Engineering Pipelines

Snowflake optimizes performance automatically using:

-

Query optimization and caching

-

Automatic clustering and pruning

-

Multi-cluster virtual warehouses

Data engineers can focus on pipeline logic instead of infrastructure tuning.

Supporting Analytics, BI, and AI Workloads

Snowflake’s architecture supports downstream use cases such as:

-

Business intelligence dashboards

-

Advanced analytics

-

Machine learning and AI workflows

This makes Snowflake a unified platform for both data engineering services and data analytics services.

Conclusion

Snowflake’s architecture is purpose-built for modern data engineering pipelines. Its separation of compute and storage, cloud-native design, and support for ELT workflows make it a powerful platform for building scalable, secure, and high-performance data systems.

For any data engineering company aiming to deliver future-ready data engineering services, Snowflake provides the architectural flexibility needed to support real-time processing, advanced analytics, and data-driven decision-making at scale.

- Art

- Causes

- Crafts

- Dance

- Drinks

- Film

- Fitness

- Food

- Игры

- Gardening

- Health

- Главная

- Literature

- Music

- Networking

- Другое

- Party

- Religion

- Shopping

- Sports

- Theater

- Wellness